⚠️Issue)

When you DROP in Databricks, it is not immediately deleted from S3. This is a device that Databricks has in place in case a user accidentally DROPs a table. When you delete a table, the actual physical file in AWS's S3 is not deleted but is kept for 30 days and is automatically deleted after 30 days.

dropping a managed table does not remove the underlying files

the documentation states that "drop table": Deletes the table and removes the directory associated with the table from the file system if the table is not EXTERNAL table. An exception is thrown if the table does not exist. In case of an external table,

community.databricks.com

If the table is very large, this can be a very costly burden. Therefore, if there is no issue with recovering the table with UNDROP, you can reduce the cost burden by quickly deleting all the files physically stored in S3. However, as users who use Databricks know, folder names are stored in S3 with the ID of the table specified in Databricks.

That's why if I already deleted the table, I get a message saying NOT FOUND TABLE. Normally, you can check the physical S3 location of the table and the ID of the table with DESCRIBE DETAIL table name.

DESCRIBE DETAIL MY_TABLE

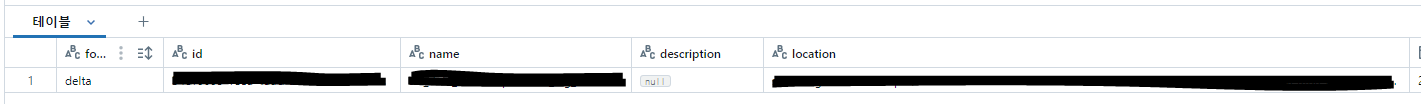

Here is the actual information in detail. However, the problem is when the table does not exist.

You cannot use DESCRIBE DETAIL if the table does not exist. This is because logically the table does not exist and therefore cannot be found.

Then, since we don't know the ID of the table in S3, we have to pay for unnecessary storage space in S3 until 30 days have passed. If it's a small table, it's not a big issue, but if it's tens or hundreds of TB of data, it's a big problem. That's why we have to find it and delete it.

🧐How to find)

1. If DROP TABLE is executed within 7 days

SHOW TABLES DROPPED IN MY_CATALOG.MY_SCHEMA

You can find it through the SHOW TABLES DROPPED command. If it has been less than 7 days since you performed DROP TABLE, you can view the information of the table you deleted like DESCRIBE DETAIL through the command above.

This will retrieve all the information for the corresponding catalog and schema that was deleted within 7 days. If you look up the folder in S3 with the column called tableid, the folder actually exists.

However, this method is not visible after 7 days (by default) because SHOW TABLES DROPPED only stores 7 days. Therefore, if the table I deleted is after 7 days, I have to find it using the following method.

2. If 7 days have passed since DROP TABLE was executed

This is a way to find history using the system table. Databricks provides a log table called aceess.audit You can find it because the deletion (DROP) history remains in this table.

Databricks documentation

docs.databricks.com

select *

from system.access.audit

where action_name = 'deleteTable'

and request_params.full_name_arg like '%MY_TABLE%'

order by event_time desc

If you search for the table name here, the history I deleted will be sorted in ascending order. Here, the table_id is the same as the folder name in S3. In full_name_arg, there is the catalog, schema, and table name of the table I deleted.

If you find the folder like this and delete it from S3, you can immediately get the space back on S3.

Thank you.

'Databricks' 카테고리의 다른 글

| [Databricks] 여러 폴더를 외부(external) 테이블로 만들기 (0) | 2024.12.26 |

|---|---|

| [Databricks] Optimize / VACUUM (4) | 2024.12.02 |

| [Databricks] Workflow Worker(Excutor) scale out 안되는 문제 (0) | 2024.11.11 |

| [Databricks] 이미 삭제(DROP)한 테이블 S3 파일 위치 찾기 (0) | 2024.10.21 |

| [Databricks] DBeaver 연결 방법 (2) | 2024.07.24 |